Optimization enables businesses to be more efficient when it comes to complex, interrelated processes, such as delivering products to the market, matching orders with production capabilities, scheduling large workforces, and more.

We are all experiencing what happens when such processes can’t be optimized: just look at our current supply chain challenges.

As optimization has become more prevalent in the market, it’s also faced its challenges. Optimization is a mathematical computation. Actually, it’s a wide range of mathematical computations and algorithms.

If you look at Wikipedia, you’ll find 157 pages of “optimization algorithms” defined. That alone should give you a hint at the complexity of solving these problems.

Business experts represent business problems mathematically. These problems are programmed, and then a computer solves the computation using whichever algorithm or approach best suits the goal.

Businesses often use the results for analytical, decision-support computations as part of larger business applications and processes.

Today, optimization is facing some significant challenges

As the volume of business data that’s included in these computations expands geometrically, we are reaching the limits for classical computing architectures to meet business needs.

We can still solve these computations, but the time-to-solve is often far greater than the time available to act on the answer.

For example, if you need to load a set of trucks in 2 hours, but it takes 7 hours to compute the optimization for how to load them to be most effective, that’s not exactly timely.

Additionally, as our businesses grow more complex and interdependent upon a variety of factors, the complexity of the computations grows, which means we have to scale the systems to be able to actually solve the problem.

It’s this growth in problem size that most often causes the processing issues.

Why the Optimization Bottleneck?

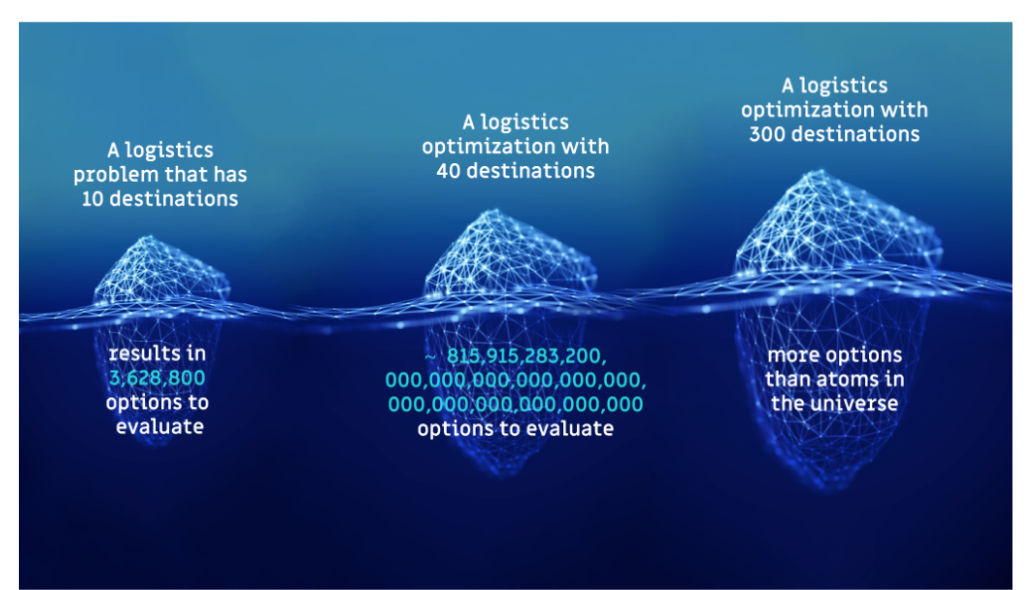

What many of us don’t know is the impact of increasing the number of aspects that are included in the optimization itself. I’ve worked around “solvers” since they first entered the marketplace, and I’m still surprised every time I see the insights in the image above.

The image assumes a logistics optimization, that is, delivering product to various locations.

It’s also called the Traveling Salesman Problem (TSP), where a salesman is visiting those destinations.

Think of it as optimizing the route taken so that everything is delivered on time, at the lowest cost and in the shortest period of time.

Doesn’t sound that difficult, now does it?

It is.

As you can see, as the number of destinations increases, the number of possible routes that need to be evaluated grows factorially, which is far faster than geometric growth. Although I’m still not sure how someone managed to count all the atoms in the universe.
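To make that growth concrete, here is a minimal sketch that counts visiting orders. It assumes a fixed starting depot and treats every ordering of the destinations as a separate route; the function name `route_orderings` is hypothetical, and the 10^80 figure is only a commonly quoted rough estimate for atoms in the observable universe, not a number taken from the image.

```python
import math


def route_orderings(destinations: int) -> int:
    """Count the orders in which a truck leaving a fixed depot can visit
    every destination exactly once (n destinations -> n! orderings)."""
    if destinations < 0:
        raise ValueError("destinations must be non-negative")
    return math.factorial(destinations)


# Assumption: a rough, commonly quoted estimate, used only for scale.
ATOMS_IN_UNIVERSE_ESTIMATE = 10**80

if __name__ == "__main__":
    for n in (5, 10, 20, 60):
        count = route_orderings(n)
        note = " (more than the atom estimate)" if count > ATOMS_IN_UNIVERSE_ESTIMATE else ""
        print(f"{n:>2} destinations: {count:.3e} orderings{note}")
```

If the direction of travel doesn't matter, you can halve each count, but that barely dents the growth.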

This is why classical systems are running into challenges, even though the problems themselves may look fairly straightforward.

It’s the permutations of potential solutions that often drive the large scale, which in turn pushes classical computers to the edge of their capability.
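Here is what checking every permutation looks like in practice. This is a sketch of the naive brute-force approach, not how commercial solvers work; the distance table `DIST` is made-up illustration data, stop 0 is assumed to be the depot, and the function names are hypothetical.

```python
from itertools import permutations

# Hypothetical symmetric distances between four stops; stop 0 is the depot.
DIST = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]


def tour_length(order, dist):
    """Length of the round trip depot -> stops in `order` -> depot."""
    stops = (0, *order, 0)
    return sum(dist[a][b] for a, b in zip(stops, stops[1:]))


def brute_force_tsp(dist):
    """Evaluate every ordering of the non-depot stops and keep the shortest."""
    others = range(1, len(dist))
    best = min(permutations(others), key=lambda order: tour_length(order, dist))
    return best, tour_length(best, dist)


if __name__ == "__main__":
    order, length = brute_force_tsp(DIST)
    print("best order:", order, "length:", length)
```

With three destinations there are only six orderings to check. The same loop over 20 destinations would have to evaluate roughly 2.4 quintillion of them, which is where the approach stops being practical.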

Why Quantum Optimization

That’s why so many believe optimization will be the first production application for quantum computing. The very foundations of quantum, aka multi-dimensional, massively parallel processing, make it very applicable to the highly matrixed computations that drive optimization.

Quantum computers allow you to represent the variables, constraints, and objectives within a multi-dimensional state, which means that quantum computing can better represent the real world, including interrelationships and dependencies.

As the data is processed, it changes states to represent the probabilistic cause and effect relationships, and the real-world scenarios that result.

So you get to view the probable outcomes of the various business decisions you could make and select the one that best meets your demands.

Thanks to the depth of data they can process, quantum computers deliver more precise results. The results are a better representation of your real-world complexities and nuances.

Unlike classical computations, there’s no need for abstractions, assumptions, or data samples compressed to be small enough to process on a classical computer.

Quantum computers also return a diversity of answers, not just one as with classical systems.

Every answer that meets the desired optimized state is returned. This is a much richer set of decision data than the classical approaches, which provide only a single answer to review.

The Bottom Line

As optimization problems demand more computing power than classical systems can provide, quantum computing offers a solution.

Even better, you’ll find your business decisions have more context that aligns to real-world situations, given the diversity of results you’ll have to choose from.

It goes way beyond speed. It’s not a choice of quantum instead of classical, but of how you can have both work together to improve the outcomes of the optimization problems that impact your bottom line.